Introduction: Exploring the New Frontiers of AI in Software Development

Just a month ago, I shared insights on LinkedIn about the transformative potential of Large Language Models (LLMs) for software development, focusing particularly on Anthropic's Claude 3 (see my previous post). With its 200,000-token context window, this model hinted at a new horizon where developers could work with about 30,000 lines of code at once, greatly simplifying code management and extension through plain prompts. The feedback on that post revealed a keen interest in the practical applications and real-world effectiveness of such technologies.

Motivated by this interest and the ongoing evolution of AI-driven development tools, I set out to explore more deeply how these technologies could integrate into and enhance the software development lifecycle. I began with koalixcrm, an open-source project of mine that I had not personally worked on in over three years. Using JetBrains' AI Assistant (https://www.jetbrains.com/ai/), I upgraded the project to new major versions of Django and Python, refactored its Docker setup, and resolved several longstanding and challenging bugs, all within a mere 8 hours.

This success led me to experiment with additional AI tools. One of these was SWE-Agent, an AI agent designed for autonomous bug fixing. These experiences have not only reaffirmed the capabilities of LLMs and AI agents in modern software development but have also driven me to delve deeper into their strategic application. This article explores the profound impact of these technologies, setting the stage for a future where AI is an integral part of every software development team. Let's begin this exploration together, examining how the latest AI tools can reshape the landscape of technology development and deployment.

Detailed Benefits of LLMs in Development

The integration of Large Language Models (LLMs) like Claude 3 and AI assistants into software development processes has been transformative. My personal experience using these tools to update and refine koalixcrm is a testament to their effectiveness and efficiency. One of the most significant advantages of LLMs is their ability to facilitate rapid bug fixes and major refactorings. For instance, while updating koalixcrm, the JetBrains AI Assistant enabled me to resolve three complex bugs that emerged from upgrading to new major Django versions. The tool helped me identify the issues quickly and suggested solutions that I could implement directly.

LLMs also help with prototyping and code conversion, which I explored by converting several Bash scripts into Python using only a few prompts. The conversion not only streamlined the scripts' functionality but also integrated them more seamlessly with the rest of the project's Python-based architecture. The ability of LLMs to understand and manipulate code across different languages makes them indispensable tools in modern software development, enabling me as a developer to focus more on strategic tasks and less on routine coding.
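To give a feel for this kind of conversion, here is a small, purely hypothetical example; it is not one of the koalixcrm scripts. The Bash one-liner `for f in logs/*.log; do gzip "$f"; done` can be rewritten with Python's standard library as sketched below. The function name `gzip_logs` and the directory layout are illustrative assumptions.

```python
import gzip
import shutil
from pathlib import Path


def gzip_logs(log_dir: Path) -> list[Path]:
    """Python equivalent of: for f in log_dir/*.log; do gzip "$f"; done

    Like gzip(1), each *.log file is replaced by a compressed *.log.gz file.
    """
    compressed = []
    for log_file in sorted(log_dir.glob("*.log")):
        target = log_file.with_name(log_file.name + ".gz")
        # Stream the file through gzip instead of loading it fully into memory.
        with log_file.open("rb") as src, gzip.open(target, "wb") as dst:
            shutil.copyfileobj(src, dst)
        log_file.unlink()  # gzip(1) removes the original file by default
        compressed.append(target)
    return compressed
```

Once the logic lives in a Python function, it can be imported, unit-tested, and called from the rest of a Python code base, which is the main practical gain over a standalone shell script.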

Challenges Uncovered: Navigating the Limitations of LLMs

While the advantages of Large Language Models (LLMs) are substantial, my testing also brought to light several critical limitations that temper the enthusiasm surrounding these technologies. One significant challenge is the limited context size. Even though models like Claude 3 can handle up to 200,000 tokens, that window still restricts the amount of code and documentation that can be processed in a single request. This limitation often forces you to break larger projects into smaller, manageable segments, which can complicate the workflow and reduce efficiency.
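Breaking a project into segments can be partly automated. The sketch below greedily groups source files so that each group's estimated size stays under a token budget. It assumes a rough heuristic of about 4 characters per token; real tokenizers differ by model and language, so the constant `CHARS_PER_TOKEN` and the helper names are illustrative assumptions, not part of any LLM vendor's API.

```python
CHARS_PER_TOKEN = 4  # rough heuristic only; real tokenizers vary by model and language


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    if not text:
        return 0
    return max(1, len(text) // CHARS_PER_TOKEN)


def chunk_files(files: dict[str, str], budget: int) -> list[list[str]]:
    """Greedily group file paths so each group's estimated size fits the budget.

    A single file larger than the budget still gets a group of its own and
    would need to be split further before it is sent to a model.
    """
    chunks: list[list[str]] = []
    current: list[str] = []
    used = 0
    for path, text in files.items():
        cost = estimate_tokens(text)
        if current and used + cost > budget:
            # Adding this file would overflow the budget: close the group.
            chunks.append(current)
            current, used = [], 0
        current.append(path)
        used += cost
    if current:
        chunks.append(current)
    return chunks
```

In practice the budget passed to `chunk_files` should be well below the 200,000-token window, because the instructions and the model's answer also consume tokens.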

An even more constraining issue is the maximum output size that LLMs can generate. During the refactoring of koalixcrm, there were instances where the desired code modifications or extensions exceeded the output limits of the LLM, requiring multiple prompts and manual assembly of code snippets. This segmented approach can introduce errors and inconsistencies, posing a challenge for maintaining code integrity.
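One cheap safeguard when stitching several partial outputs together is to check the assembled result mechanically before reviewing it by hand. The following is a minimal sketch for Python code, assuming the snippets are meant to be concatenated in order; the function name `assemble_and_check` is hypothetical. It uses the standard `ast` module to catch syntax errors and top-level functions or classes defined twice, a typical symptom of overlapping snippets.

```python
import ast
from collections import Counter


def assemble_and_check(snippets: list[str]) -> tuple[str, list[str]]:
    """Join partial code outputs in order and return (source, problems).

    Problems are syntax errors, or top-level functions/classes that are
    defined more than once (often a sign of overlapping snippets).
    """
    source = "\n\n".join(s.strip("\n") for s in snippets) + "\n"
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return source, [f"syntax error at line {exc.lineno}: {exc.msg}"]
    names = [
        node.name
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef))
    ]
    duplicates = [name for name, count in Counter(names).items() if count > 1]
    return source, [f"duplicate definition: {name}" for name in duplicates]
```

A clean result here only means the code parses; the project's automated tests are still needed to confirm that the stitched code behaves correctly.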

Moreover, integrating these AI solutions into complex existing systems presents its own set of challenges. The initial misconception that LLMs could seamlessly plug into any stage of software development without substantial setup or oversight was quickly dispelled. Effective integration often requires significant preparatory work to define the scope and structure of tasks suitable for AI intervention, as well as ongoing management to align the AI-generated outputs with the project’s architectural and functional standards.

These challenges highlight that while LLMs are powerful tools, they are not a free lunch. They require thoughtful integration and management to fully leverage their capabilities within the complex ecosystem of software development.

AI Agents as a Solution: Enhancing Capabilities through Strategic Integration

AI agents represent a significant advance in software development, offering a more dynamic and autonomous approach to coding and system management. These agents, such as SWE-Agent and Devin AI, are software systems designed to perform complex tasks autonomously. SWE-Agent, for instance, has demonstrated its ability to fix bugs in code autonomously with a measurable success rate, streamlining the debugging process (https://www.unite.ai/). Devin AI aims to go a step further by handling entire software engineering projects from start to finish, and is presented as being able to learn new technologies and integrate them into existing systems (https://devinai.ai/).

However, for AI agents to be truly effective, specific architectural and scope management strategies are necessary. First, defining clear and manageable scopes for AI tasks is crucial to prevent overload and ensure precision. AI agents perform best when tasks are well-defined and within the capabilities of their programming and learning algorithms. Second, the architectural integration of these agents into existing systems must be planned carefully. This involves setting up interfaces and protocols that allow AI agents to interact seamlessly with other parts of the software infrastructure, ensuring that their interventions are both appropriate and well timed.
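One way to make "a clear and manageable scope" concrete is to hand the agent an explicit task definition and to reject changes that leave it. The sketch below is hypothetical: `AgentTask`, its fields, and the example paths are illustrative assumptions, not the configuration format of SWE-Agent, Devin AI, or any other tool.

```python
from dataclasses import dataclass
from fnmatch import fnmatch


@dataclass(frozen=True)
class AgentTask:
    """Hypothetical scope definition handed to an AI agent."""

    issue_id: str
    description: str
    allowed_paths: tuple[str, ...]          # glob patterns the agent may modify
    test_command: str = "python -m pytest"  # how success is verified
    max_steps: int = 30                     # hard limit on agent actions

    def out_of_scope(self, changed_files: list[str]) -> list[str]:
        """Return the changed files that no allowed pattern covers.

        Note: fnmatch's "*" also matches "/", so "app/billing/*" covers
        files in subdirectories of app/billing as well.
        """
        return [
            path
            for path in changed_files
            if not any(fnmatch(path, pattern) for pattern in self.allowed_paths)
        ]
```

A check like `out_of_scope` is one possible "interface" between the agent and the rest of the infrastructure: a proposed patch that touches files outside the agreed scope can be rejected automatically before a human ever reviews it.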

These strategies are essential for maximizing the benefits of AI agents, allowing them to complement and enhance human efforts in software development rather than simply automating tasks. This integration not only increases efficiency but also improves the overall quality of software projects, pushing the boundaries of what can be achieved with AI in software development.

Cost Analysis and Feasibility: Weighing AI Against Traditional Methods

Exploring the financial implications of integrating AI into software development requires considering cost-effectiveness, feasibility, and scalability. For example, having GPT-4 attempt to fix a simple error in a README.md file costs about $4 per attempt. However, given that SWE-Agent with GPT-4 currently resolves only 12.29% of the issues in the SWE-bench benchmark, the effective cost per successful bug fix is about $4 / 0.1229, or roughly $32.50. While this may not represent a significant saving initially, AI tools like SWE-Agent and Devin AI are available on demand and scale easily: they can handle many such tasks in parallel without additional onboarding costs, which could yield substantial long-term savings and efficiency gains.
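The effective cost follows from a simple expected-value argument: if every attempt costs the same amount c and succeeds with probability p, independently of the others, then on average 1/p attempts are needed per success, so the expected spend per successful fix is c / p. The sketch below applies this to the figures quoted above; the independence assumption is a simplification, since in reality some issues are much harder than others.

```python
def expected_cost_per_fix(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend per successful fix, assuming a fixed cost per attempt
    and independent attempts that succeed with probability success_rate."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


# Figures from the text: about $4 per attempt, 12.29% success rate.
print(f"${expected_cost_per_fix(4.0, 0.1229):.2f}")  # → $32.55
```

The same function makes it easy to see how sensitive the economics are to the success rate: doubling the success rate halves the effective cost per fix.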

Additionally, for SWE-Agent to be used effectively, the target software must meet specific criteria: it must be written in Python, run in a Docker container, and have robust automated test cases. With further investment, the principles behind SWE-Agent could be adapted to support other programming languages, broadening the applicability of this technology.

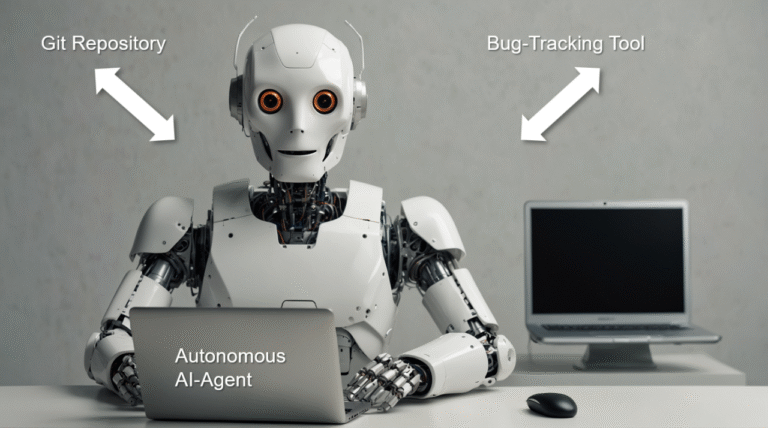

Vision for the Future: Predicting Changes in AI Development

The future of AI in software development is poised for significant advancements. In the near term, I expect progress in AI agents to outpace progress in LLMs themselves. The current bug-fix success rate of 12.29% is likely to increase rapidly as agent strategies and workflows improve. I envision a near future in which every code repository and issue management system is connected to an autonomous AI agent. These agents will continuously monitor issue management systems and proactively attempt to resolve reported issues, transforming software maintenance and development. This shift toward autonomous, AI-driven operations promises to change how software development and maintenance are approached, improving efficiency and reducing the time developers spend writing code.
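Architecturally, such a setup boils down to a loop that polls the issue tracker and hands each open issue to an agent. The sketch below shows one polling pass; `Issue`, `IssueTracker`, `attempt_fix`, and the in-memory tracker are hypothetical stand-ins for a real issue system and a real agent such as SWE-Agent. As a design choice, the sketch only marks an issue as having a proposed fix rather than closing it, leaving the final decision to a human reviewer.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class Issue:
    issue_id: str
    title: str
    status: str = "open"


class IssueTracker(Protocol):
    """Hypothetical interface; a real system would wrap the tracker's API."""

    def open_issues(self) -> list[Issue]: ...
    def comment(self, issue_id: str, text: str) -> None: ...


def process_open_issues(
    tracker: IssueTracker, attempt_fix: Callable[[Issue], bool]
) -> dict[str, bool]:
    """One polling pass: try to fix each open issue and report back."""
    results = {}
    for issue in tracker.open_issues():
        fixed = attempt_fix(issue)
        results[issue.issue_id] = fixed
        if fixed:
            issue.status = "fix proposed"  # a human still reviews the change
            tracker.comment(issue.issue_id, "Agent proposed a fix; awaiting review.")
        else:
            tracker.comment(issue.issue_id, "Agent could not fix this issue.")
    return results


class InMemoryTracker:
    """Toy tracker used to exercise the loop without any external service."""

    def __init__(self, issues: list[Issue]) -> None:
        self.issues = issues
        self.comments: list[tuple[str, str]] = []

    def open_issues(self) -> list[Issue]:
        return [i for i in self.issues if i.status == "open"]

    def comment(self, issue_id: str, text: str) -> None:
        self.comments.append((issue_id, text))
```

To make the monitoring continuous, a scheduler (for example a cron job or a CI pipeline trigger) would simply call `process_open_issues` at regular intervals.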